Data Quality Part 6: What Have We Learned

Posted: October 4th, 2023

Authors: Gene Y., Aditya S.

We’ve made it through 5 articles in this series (with a bunch of stories and analogies along the way). We started this journey with this sentence:

Defining data quality, and implementing a data quality program, furthers the goal that the data collected serve the intended purpose, i.e., informed decision making.

So, it’s probably a good idea for us to review what we’ve explored:

- Our deck building story taught us that there are multiple ways to measure the same parameter, and the selection of tools and procedures must be appropriate to the use of the specific measurement.

- Our bathroom scale story spoke to the difficulty in assessing accuracy when measuring an unknown, and the need to manage the framework of the measurement (time and date, etc.).

- Our tire pressure story taught us about using reliable, repeatable tools, and making measurements that are meaningful to our specific question.

- Our methamphetamine story taught us about sensitivity of measurements and the use of numbers of different magnitudes and significant figures.

- Our search for a specific screw taught us about "magnitudes" of measurement, and that the answer to the same question might or might not be useful, depending on the magnitude at which it is reported.

- Further, our search for a screw taught us that we want our measurement to be most robust at the "pass/fail" point, and that it can be "less robust" at points away from "pass/fail." It follows that any use of data for purposes other than the original intent must be carefully evaluated for usability and applicability.

- Our discussion of "when" reminded us that we need to think about the calendar, which might be driven by a permit or regulatory compliance date.

- “When” also refers to “when” during a process cycle, or during whatever activity is being investigated, in this case, when the facility is operating “normally” (whatever that means).

- "When" (or duration) could be driven by the need for additional sensitivity, which calls for a longer sample duration, or by the need to cover an entire process cycle.

- And, of course, “when” is also driven by time of day, and weather, and access, and available resources. Clearly, in this case, we need the wind to be blowing in the correct direction.

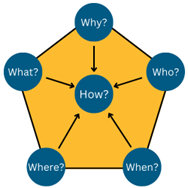

Let's finish by connecting "when" to our components of data quality: representativeness (making sure the facility is operating) and sensitivity (making sure we sample long enough to collect an appropriate sample). Similar assessments apply to the other five questions (why, what, etc.). Thinking about these connections is left as an exercise for the reader.

We want our collected data to serve an intended purpose (i.e., informed decision making). The quantitative and qualitative aspects of data quality presented in this series are necessary to collect data of appropriate quality and, more to the point, of appropriate usability for the decision at hand. The required levels of accuracy, precision, and sensitivity are driven by the goal of the data collection exercise.
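To make the screw story's "robust at the pass/fail point" idea concrete, here is a minimal sketch, not a method from this series. It assumes a simple upper "not to exceed" limit and a symmetric uncertainty band (measured value ± uncertainty); the function name `classify` and the three verdicts are hypothetical, chosen only for illustration.

```python
from enum import Enum


class Verdict(Enum):
    PASS = "pass"
    FAIL = "fail"
    INCONCLUSIVE = "inconclusive"


def classify(measured: float, uncertainty: float, limit: float) -> Verdict:
    """Compare a measurement against an upper limit, accounting for its uncertainty.

    Hypothetical helper: assumes a symmetric band (measured +/- uncertainty)
    and an upper "not to exceed" limit. A band that touches or straddles
    the limit is treated as inconclusive.
    """
    if uncertainty < 0:
        raise ValueError("uncertainty must be non-negative")
    low, high = measured - uncertainty, measured + uncertainty
    if high < limit:
        return Verdict.PASS  # whole band is below the limit
    if low > limit:
        return Verdict.FAIL  # whole band is above the limit
    return Verdict.INCONCLUSIVE  # band touches or crosses the limit


if __name__ == "__main__":
    limit = 10.0
    print(classify(4.0, 3.0, limit))   # far below the limit: a loose +/-3 is fine
    print(classify(9.5, 1.0, limit))   # near the limit: +/-1 cannot decide
    print(classify(9.5, 0.2, limit))   # near the limit: +/-0.2 can decide
    print(classify(15.0, 3.0, limit))  # far above the limit: a loose +/-3 is fine
```

The same ±3 uncertainty that settles the question far from the limit cannot settle it near the limit, which is why the needed precision depends on the decision the data must support.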

Smaller data sets must rely on 'external' factors, such as documented methods and practices, to assess and provide acceptable data quality, because they offer less statistical evidence on their own. The smaller the data set, the more critical it becomes to define methodologies and practices for each measurement. Remember: we want to establish data quality requirements (and objectives), and these need to be aligned with the measurement goal (the question of interest).

We’ve spent six articles trying to link a critical and twisty technical topic to some straightforward and easily understandable analogies and anecdotes. Of course, this all does lead to a whole lot of detailed and fussy technical tools used for project implementation and management. Here’s a high-level list of tools and practices that could and should be addressed to support data quality for small data sets:

- Planning

- Sample Collection

- Sample Preservation and Transport

- Sample Preparation and Analysis

- Mass Balance and General Engineering Principles

- Reporting (sampling, analysis, process)

- Training

- Data Controls and Management

- Data Audits

- Supplemental Documentation

Thank you for joining us on this journey to discuss and explore measurement and data quality. We tried to use some analogies and some stories to break down, unpack, and clarify this often overlooked, but critical, aspect of measurement. If you want to explore this topic more, or you have specific questions, we are happy to talk with you. Please reach out to:

- Gene Youngerman, PhD Chemist and unrepentant science geek, 40 years’ experience doing stack testing and complex measurement programs. gyoungerman@all4inc.com, 512.649.2571

- Aditya Shivkumar, MS Environmental Engineer and data enthusiast, 9 years' experience in data handling, statistical analysis, and environmental compliance. ashivkumar@all4inc.com, 281-201-1239

Links to other blogs from our Data Quality Series: